In my previous blog article, I explained how to use a Firefox plugin to automate tedious web tasks. In this article, I will explain how to use the Linux command curl and a bash script to automate the same kind of web tasks. This approach is faster than using the plugin, and the code can be modified to suit other web automation tasks as well.

Objective

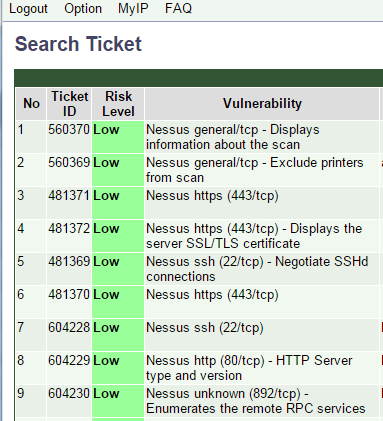

Our task is to update about 300 network security tickets, generated by the Nessus network scanner, through a web form, submitting them with curl instead of clicking the submit button for each one by hand. Doing the job manually would take about an hour; with curl it takes less than 30 seconds.

First, here is the complete code:

```bash
#!/bin/bash
#
# curl parameters explained:
# -s, silent mode
# -D, write the response HTTP headers to a file, or to stdout with "-D -"
# -X, HTTP method (GET, POST, PUT, DELETE)
# --data, HTTP POST data contents
# --data-urlencode, like --data but URL-encodes the value
# -c, save the cookies to a file path
# -b, use the cookies from a file path
# -o, write the response body to a file path
#

# Credentials for the NUS SVMS website login
username='your_user_name'
password='your_password'

# This string locates the content/lines we want to extract from the HTML response
search_string="Search Ticket Result"

# Get cookies from the website by sending a POST request to the login page.
# --data-urlencode keeps special characters (&, +, %) in the credentials intact.
curl -s -D - -X POST --data-urlencode "userid=$username" --data-urlencode "passwd=$password" \
  --data "submit=Login" -c /tmp/cookies.txt -o /dev/null \
  "https://svms.nus.edu.sg/login"

# Use the obtained cookies to fetch the unresolved ticket list with GET
# and save the HTML response to a local file for parsing
curl -s -D - -b /tmp/cookies.txt -o /tmp/output \
  "https://svms.nus.edu.sg/ticket/search-ticket?status=unresolved"

# Line number of the search string in the HTML response; earlier lines can be ignored
start_line=$(grep -n -m 1 "$search_string" /tmp/output | cut -d ":" -f 1)

# Parse the ticket count from the same line of the HTML response
ticket_no=$(grep -n -m 1 "$search_string" /tmp/output | cut -d ":" -f 2 | cut -d "-" -f 2 | cut -d " " -f 2)

# Lines to skip from the start line to the first ticket id line
first_skip=29
# Lines to skip from one ticket id line to the next
skip=31

# Line number of the first ticket id
line=$((start_line + first_skip))
#echo $line

# Loop through the ticket id lines; for each ticket id send a POST request
# to update the ticket, and print a message if the update succeeded
for (( i = 0; i < ticket_no; i++ )); do
  ticket_id=$(awk -v var="$line" 'NR==var {print $0}' /tmp/output | cut -d ">" -f 2 | cut -d "<" -f 1)

  if curl -s -D - -X POST --data "scanticket_pkey=$ticket_id&status=justified&remarks=No+Impact&submit=Update" \
      -b /tmp/cookies.txt \
      --referer "https://svms.nus.edu.sg/ticket/edit-ticket-form?scanticket_pkey=$ticket_id" \
      "https://svms.nus.edu.sg/ticket/update-ticket" \
      | grep -q "has been updated successfully"; then
    echo "Updated ticket ID ${ticket_id} successfully"
  fi

  line=$((line + skip))
done
```

To run the code on Ubuntu Linux, save it as curl_automation_nussvms.sh, make it executable and run it:

```bash
chmod +x curl_automation_nussvms.sh
./curl_automation_nussvms.sh
```

Steps

- Log in to get cookies from the server.
- Request the ticket list from the server using the obtained cookies.
- Find the line in the HTML response where the search results start, so that we can locate the ticket IDs, and read how many tickets we have to deal with.
- Send update requests to the server repeatedly until the last ticket ID is updated.
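The four steps can be sketched as separate shell functions. This is a hypothetical restructuring, not the script above: the names `run`, `login`, `fetch_tickets` and `update_ticket` and the `DRY_RUN` switch are illustrative, and step 3 is replaced by two made-up ticket IDs. With `DRY_RUN=1` (the default here) each curl command is printed instead of sent, so the flow can be checked without contacting the SVMS site.

```bash
#!/bin/bash
# Hypothetical restructuring of the four steps into functions.
# With DRY_RUN=1 (the default here) each curl command is only printed.
base_url='https://svms.nus.edu.sg'
cookie_jar='/tmp/cookies.txt'
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, otherwise execute it
run() {
  if [ "$DRY_RUN" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Step 1: log in and save the session cookies
login() {
  run curl -s -c "$cookie_jar" -o /dev/null \
    --data-urlencode "userid=$1" --data-urlencode "passwd=$2" \
    --data 'submit=Login' "$base_url/login"
}

# Step 2: fetch the unresolved ticket list using the saved cookies
fetch_tickets() {
  run curl -s -b "$cookie_jar" -o /tmp/output \
    "$base_url/ticket/search-ticket?status=unresolved"
}

# Step 4: send one update request for a single ticket id
update_ticket() {
  run curl -s -b "$cookie_jar" \
    --referer "$base_url/ticket/edit-ticket-form?scanticket_pkey=$1" \
    --data "scanticket_pkey=$1&status=justified&remarks=No+Impact&submit=Update" \
    "$base_url/ticket/update-ticket"
}

login 'your_user_name' 'your_password'
fetch_tickets
# Step 3 (parsing /tmp/output) is omitted; two made-up ids stand in for it
for ticket_id in 1001 1002; do
  update_ticket "$ticket_id"
done
```

In dry-run mode this prints four curl command lines. Only switch to `DRY_RUN=0` once real credentials and a real parsing step are in place, because the requests will then actually be sent.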

Explanation

The curl, cut and grep commands are straightforward; run “man curl”, “man cut” and “man grep” to read their manual pages.
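To see how the grep and cut pipelines pull out the start line and the ticket count, here is a small offline sketch run against a made-up HTML snippet. The sample markup and its “Search Ticket Result - 2 tickets found” wording are assumptions; the real SVMS page is not shown in this article, so the cut fields may need adjusting for it.

```bash
#!/bin/bash
# Hypothetical sample response; the real page layout is assumed, not known.
sample=$(mktemp)
cat > "$sample" <<'EOF'
<html>
<body>
<h3>Search Ticket Result - 2 tickets found</h3>
</body>
</html>
EOF

search_string="Search Ticket Result"

# grep -n prefixes each matching line with "LINE_NUMBER:"
grep -n "$search_string" "$sample"

# -m 1 stops at the first match; field 1 (before the first ":") is the line number
start_line=$(grep -n -m 1 "$search_string" "$sample" | cut -d ":" -f 1)

# Field 2 is the line text; after the "-" the count is the second
# space-separated field (the first one is empty because of the leading space)
ticket_no=$(grep -n -m 1 "$search_string" "$sample" | cut -d ":" -f 2 | cut -d "-" -f 2 | cut -d " " -f 2)

echo "start_line=$start_line ticket_no=$ticket_no"
rm -f "$sample"
```

Running it prints the matching line `3:<h3>Search Ticket Result - 2 tickets found</h3>` followed by `start_line=3 ticket_no=2`.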

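The awk command uses the built-in variable “NR” (the number of the current record, which by default is the current line) and compares it with the line number of the ticket ID in the HTML response, so only that line is printed:

```bash
ticket_id=$(awk -v var="$line" 'NR==var {print $0}' /tmp/output | cut -d ">" -f 2 | cut -d "<" -f 1)
```

It also uses the “-v” option to pass the bash variable “$line” into awk as a new variable “var”. The two cut commands then keep the text between the first “>” and the following “<”, which is the ticket ID.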
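The line-stepping loop can be tried offline on a tiny made-up response. In this sketch the ticket IDs sit in `<td>` cells every 3 lines, so `first_skip=2` and `skip=3` are assumptions that fit this sample only (the script uses 29 and 31 for the real SVMS page), and `start_line` and `ticket_no` are hard-coded instead of parsed.

```bash
#!/bin/bash
# Made-up response: a header line, then a ticket id cell every 3 lines
sample=$(mktemp)
cat > "$sample" <<'EOF'
<h3>Search Ticket Result - 2 tickets found</h3>
<tr>
<td>1001</td>
<td>Open</td>
<tr>
<td>1002</td>
<td>Open</td>
EOF

start_line=1   # line of the search string (hard-coded for this sample)
ticket_no=2    # ticket count (hard-coded for this sample)
first_skip=2   # offset from start_line to the first id line (sample only)
skip=3         # offset between id lines (sample only)

line=$((start_line + first_skip))
ids=()
for (( i = 0; i < ticket_no; i++ )); do
  # -v copies the shell variable $line into the awk variable var;
  # NR is awk's current line number, so only line number $line is printed
  ticket_id=$(awk -v var="$line" 'NR==var {print $0}' "$sample" | cut -d ">" -f 2 | cut -d "<" -f 1)
  echo "line $line -> ticket id $ticket_id"
  ids+=("$ticket_id")
  line=$((line + skip))
done
rm -f "$sample"
```

It prints `line 3 -> ticket id 1001` and `line 6 -> ticket id 1002`.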

NOTE

If you want to use this script to automate other websites, you may need to modify the request URLs, the form fields, the text search patterns, and the hard-coded line offsets (first_skip and skip), which depend on the layout of the HTML page, to make it work.
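The fixed line offsets are the most fragile part: any change to the page's HTML shifts every ticket line. One less brittle alternative, swapped in here and not what the script above does, is to match the ticket IDs by pattern. This sketch assumes, hypothetically, that each row of the results page links to `edit-ticket-form?scanticket_pkey=<id>` (the URL the script sends as its referer); the sample HTML is made up.

```bash
#!/bin/bash
# Made-up results page; assumes each row links to edit-ticket-form with the id
sample=$(mktemp)
cat > "$sample" <<'EOF'
<tr><td><a href="/ticket/edit-ticket-form?scanticket_pkey=1001">1001</a></td></tr>
<tr><td><a href="/ticket/edit-ticket-form?scanticket_pkey=1002">1002</a></td></tr>
<tr><td><a href="/ticket/edit-ticket-form?scanticket_pkey=1001">1001</a></td></tr>
EOF

# grep -o prints only the matched text, one match per line;
# cut keeps the part after "=", and sort -u drops duplicate ids
mapfile -t ids < <(grep -o 'scanticket_pkey=[0-9][0-9]*' "$sample" | cut -d "=" -f 2 | sort -u)
rm -f "$sample"

echo "found ${#ids[@]} tickets"
for ticket_id in "${ids[@]}"; do
  echo "would update ticket $ticket_id"
done
```

With this approach the loop no longer needs the ticket count or any line offsets; it prints `found 2 tickets` and one line per unique ID. `mapfile` requires bash 4 or later.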

Happy coding!